Question:

We own several different brands of direct reading gas detectors. Different manufacturers seem to use different concentrations of gas to calibrate their instruments. Is there a rule of thumb regarding the best concentration of the calibration gas? Also, do I need to use different concentrations of gas for bump tests?

Answer:

At GfG the rule of thumb is to use a calibration gas concentration that is near 50% of the full linear range of the sensor. Part of the rationale is that testing a sensor at 50% of full range verifies that the sensor still has enough remaining reaction efficiency to handle higher gas concentrations within the performance specifications of the sensor. This is similar to why a medical examination frequently includes putting the patient on a treadmill for a “stress test”. You may not get the complete picture if all you do is test the patient’s blood pressure while sitting still.

However, you can generally use any concentration within the linear range of the sensor. Sometimes the stability and availability of the calibration gas dictate the concentration we use. For chlorine we generally use 10 ppm gas to calibrate the sensor, even though 10 ppm is the full linear range of the sensor rather than 50% of it. (For the chlorine sensor the over-limit concentration is about 12.5 ppm.) Sometimes the odor or toxic nature of the gas determines the concentration. For instance, the standard ranges for GfG H2S sensors are 0 – 100 ppm or 0 – 500 ppm. However, we normally use 20 ppm H2S to calibrate the sensor.
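To make the arithmetic concrete, here is a minimal Python sketch that compares the 50% rule of thumb with the concentrations mentioned above. The function names are hypothetical (not part of any GfG software), and the sketch assumes every linear range starts at zero.

```python
def rule_of_thumb_concentration(full_linear_range_ppm: float) -> float:
    """Nominal calibration concentration: about 50% of the full linear range."""
    return 0.5 * full_linear_range_ppm


def fraction_of_range(cal_gas_ppm: float, full_linear_range_ppm: float) -> float:
    """Fraction of the linear range used by a calibration gas concentration.

    Raises ValueError if the gas falls outside the (assumed 0-based) linear range.
    """
    if not 0 < cal_gas_ppm <= full_linear_range_ppm:
        raise ValueError(
            f"{cal_gas_ppm} ppm is outside the 0-{full_linear_range_ppm} ppm linear range"
        )
    return cal_gas_ppm / full_linear_range_ppm


# (calibration gas in ppm, full linear range in ppm), taken from the answer above
examples = {
    "Cl2 (0-10 ppm)": (10.0, 10.0),
    "H2S (0-100 ppm)": (20.0, 100.0),
    "H2S (0-500 ppm)": (20.0, 500.0),
}
for label, (gas, full) in examples.items():
    print(
        f"{label}: rule of thumb {rule_of_thumb_concentration(full):g} ppm, "
        f"actual {gas:g} ppm = {fraction_of_range(gas, full):.0%} of range"
    )
```

Chlorine comes out at 100% of its range and 20 ppm H2S at only 20% or 4%, which is fine as long as the gas stays inside the linear range.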

It is not necessary to calibrate with a gas concentration near the alarm concentration. The following example uses H2S sensor calibration to show why.

Calibration is normally a two-point adjustment procedure. In the first step the sensor is “fresh air” zero-adjusted in an atmosphere that contains no measurable contaminants. In the second step the instrument is “span” adjusted using calibration gas that contains a precise concentration of the toxic gas. Most calibration gas is manufactured and packaged by specialty suppliers to traceable reference standards. The accuracy, and the dating (shelf life) over which the accuracy statement applies, are normally printed on the cylinder label. Several calibration gas manufacturers offer H2S calibration gas with ±3.0% accuracy and 6-month shelf-life dating. They also offer ±10.0% accuracy with up to two-year shelf-life dating.
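The zero and span steps can be pictured as fixing two points on a straight line. The Python sketch below is a generic two-point linear calibration under the assumption that the sensor responds linearly; it is not GfG firmware, and the raw signal values (arbitrary counts) and function names are hypothetical.

```python
from typing import Callable


def two_point_calibration(
    zero_raw: float, span_raw: float, span_gas_ppm: float
) -> Callable[[float], float]:
    """Build a raw-signal -> ppm conversion from a fresh-air zero and a gas span.

    zero_raw:     raw signal recorded in fresh air (taken as 0 ppm)
    span_raw:     raw signal recorded on calibration gas
    span_gas_ppm: certified concentration printed on the cylinder label
    """
    span_delta = span_raw - zero_raw
    if span_delta == 0:
        raise ValueError("no response to span gas; sensor cannot be span-adjusted")

    def to_ppm(raw: float) -> float:
        # Straight line through (zero_raw, 0 ppm) and (span_raw, span_gas_ppm)
        return (raw - zero_raw) * span_gas_ppm / span_delta

    return to_ppm


# Hypothetical raw counts for an H2S sensor spanned on 20 ppm gas
to_ppm = two_point_calibration(zero_raw=400, span_raw=1400, span_gas_ppm=20.0)
print(to_ppm(400), to_ppm(1400), to_ppm(900))  # 0.0 20.0 10.0
```

Any error in the cylinder concentration passes straight into `span_gas_ppm`, which is why the cylinder accuracy matters.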

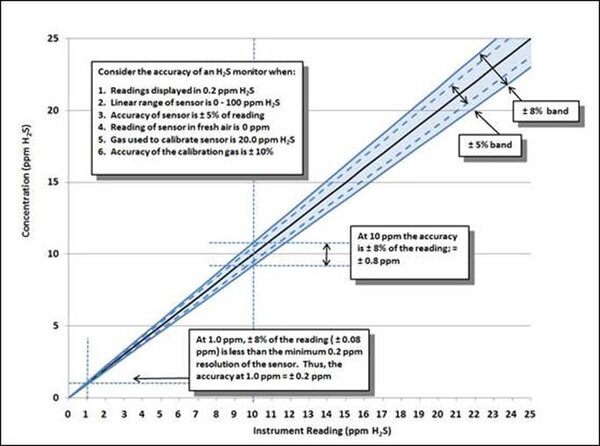

The accuracy of the reading is determined by the accuracy of the sensor (±5% of reading) plus the accuracy of the calibration gas (±3% of reading), for a combined accuracy of ±8% of reading. The effect of these relationships on the accuracy of readings can be illustrated graphically.
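As a rough illustration, the Python sketch below adds the two tolerances the same way, using the ±5% sensor accuracy from this example. Pairing that sensor with the ±10% long-dated cylinder is a hypothetical extension of the same arithmetic, not a published specification, and the function name is illustrative.

```python
SENSOR_ACCURACY_PCT = 5.0  # ±5% of reading, from the example above


def combined_accuracy_pct(sensor_pct: float, cal_gas_pct: float) -> float:
    """Worst-case accuracy in ± % of reading: the two tolerances simply add."""
    return sensor_pct + cal_gas_pct


cylinders = (
    ("±3% cylinder (6-month dating)", 3.0),
    ("±10% cylinder (two-year dating)", 10.0),  # hypothetical pairing
)
for label, gas_pct in cylinders:
    total = combined_accuracy_pct(SENSOR_ACCURACY_PCT, gas_pct)
    print(f"{label}: ±{total:g}% of reading")
```

This prints ±8% and ±15% of reading, showing the trade-off between tighter accuracy and longer shelf life.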

In this example the instrument is calibrated using 20 ppm H2S calibration gas. The minimum resolution of the H2S sensor is set at 0.2 ppm, and the full linear range of the H2S sensor is 0 – 100 ppm.

At 20 ppm the accuracy of the properly calibrated sensor is ±8% of 20 ppm = ±1.6 ppm. When the instrument is exposed to 10 ppm H2S, the accuracy of the reading is ±8% of 10 ppm = ±0.8 ppm.

When the instrument is later exposed to 1.0 ppm, however, the concentration is much closer to the minimum unit of resolution. Since 8% of 1.0 ppm is 0.08 ppm, which is less than the minimum unit of resolution, the accuracy of the reading at 1.0 ppm becomes ±0.2 ppm.

So even though 20 ppm calibration gas is used to adjust the sensor in this example, the accuracy of the reading at 1.0 ppm is still ±0.2 ppm (the minimum resolution of the sensor).
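The whole worked example fits in a few lines of Python. This is a sketch of the arithmetic only, assuming the ±8% and 0.2 ppm figures above; the function name is hypothetical.

```python
COMBINED_ACCURACY = 0.08  # ±8% of reading (±5% sensor + ±3% calibration gas)
RESOLUTION_PPM = 0.2      # minimum unit of resolution in this example


def reading_accuracy_ppm(reading_ppm: float) -> float:
    """± accuracy in ppm: 8% of reading, but never finer than the resolution."""
    return max(COMBINED_ACCURACY * reading_ppm, RESOLUTION_PPM)


for reading in (20.0, 10.0, 1.0):
    print(f"{reading:.1f} ppm -> ±{reading_accuracy_ppm(reading):.1f} ppm")
# 20.0 ppm -> ±1.6 ppm
# 10.0 ppm -> ±0.8 ppm
# 1.0 ppm -> ±0.2 ppm
```

With these numbers the resolution floor takes over below 2.5 ppm (0.2 / 0.08), regardless of which calibration concentration was used.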

Part of the reason for performing a bump test is to verify that the sensors respond properly when exposed to test gas. However, an equally important part of the test is to verify that the instrument alarms and indicators work properly when the instrument is exposed to gas. The best practice when performing a bump test on a direct reading portable gas monitor is to use gas concentrations high enough to test the function of both the “low” (A1) and “high” (A2) peak concentration gas alarms for all the sensors installed in the instrument. At a minimum, the gas concentrations should be high enough to activate the A1 “low” peak alarms.
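The bump test criterion above can be sketched in Python as a check of which peak alarms a given test gas would activate. The setpoints used in the demo are hypothetical placeholders, not GfG defaults, and the sketch assumes an alarm trips at the setpoint itself (at or above for rising alarms, at or below for descending oxygen alarms).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class AlarmSetpoints:
    a1: float                 # "low" peak alarm
    a2: float                 # "high" peak alarm
    descending: bool = False  # True for oxygen-deficiency style alarms


def alarms_triggered(test_gas: float, sp: AlarmSetpoints) -> list[str]:
    """Return the peak alarms a bump-test concentration would activate."""
    def trips(setpoint: float) -> bool:
        return test_gas <= setpoint if sp.descending else test_gas >= setpoint

    return [name for name, value in (("A1", sp.a1), ("A2", sp.a2)) if trips(value)]


def bump_gas_verdict(test_gas: float, sp: AlarmSetpoints) -> str:
    """Classify a test gas against the best-practice and minimum criteria."""
    fired = alarms_triggered(test_gas, sp)
    if fired == ["A1", "A2"]:
        return "best practice: activates A1 and A2"
    if "A1" in fired:
        return "minimum: activates A1 only"
    return "insufficient: does not activate A1"


# HYPOTHETICAL toxic-gas setpoints, for illustration only
EXAMPLE_SETPOINTS = AlarmSetpoints(a1=10.0, a2=15.0)
for gas_ppm in (20.0, 12.0, 5.0):
    print(gas_ppm, bump_gas_verdict(gas_ppm, EXAMPLE_SETPOINTS))
```

In practice the check would be repeated for every installed sensor, each with its own setpoints.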

Fresh air contains 20.9% oxygen. During the fresh air AutoCal procedure the oxygen sensors in GfG instruments are automatically adjusted to match this concentration. In North America the first (A1) descending alarm is normally set at 19.5%; when the concentration drops below this level, the A1 alarm is activated. In North America the second (A2) descending alarm is normally set at 18%. The standard GfG “Quad Mix” calibration gas normally used to test G450 and G460 instruments in North America includes 50% LEL combustible gas, 200 ppm CO, 20 ppm H2S and 18% O2. The 18% oxygen concentration in this mixture is low enough to activate the second descending (A2) oxygen deficiency alarm. However, even for customers who set the A2 alarm at 17%, I suggest using calibration gas that contains at least 18% oxygen, because a lower oxygen concentration can affect the accuracy of the LEL sensor calibration. (It’s very easy for customers who set the descending A2 alarm for oxygen at 17% to test the alarm by exhaling on the sensor.)
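The oxygen numbers above can be checked with a short Python sketch. It assumes a descending alarm activates when the reading is at or below its setpoint (consistent with 18% gas activating an 18% A2 alarm); the function name is hypothetical.

```python
# Setpoints and limits quoted in the answer (North American practice)
A1_O2_PCT = 19.5       # first descending alarm
A2_O2_PCT = 18.0       # second descending alarm
MIN_CAL_O2_PCT = 18.0  # suggested floor to protect the LEL sensor calibration


def check_o2_cal_gas(o2_pct: float, a1: float = A1_O2_PCT, a2: float = A2_O2_PCT):
    """Return (descending alarms activated, whether O2 is high enough for LEL cal)."""
    alarms = [name for name, sp in (("A1", a1), ("A2", a2)) if o2_pct <= sp]
    return alarms, o2_pct >= MIN_CAL_O2_PCT


print(check_o2_cal_gas(18.0))           # (['A1', 'A2'], True)
print(check_o2_cal_gas(18.0, a2=17.0))  # (['A1'], True)
print(check_o2_cal_gas(16.0, a2=17.0))  # (['A1', 'A2'], False)
```

With A2 set at 17%, the 18% Quad Mix exercises only A1, while a gas low enough to trip A2 fails the 18% floor; that is why exhaling on the sensor is the easier way to test a 17% A2 alarm.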

GfG Application Note “AP1007 Calibration Requirements for Direct Reading Portable Gas Monitors” explores this issue in greater detail and provides the procedures and definitions that are used in North America. The note is posted on our website in the “Support Materials” section along with the other Application and Technical notes.

Thank you for the question.